Celestia’s data availability layer

Data availability (DA) answers a simple question: has this block’s data been published and can it be downloaded? In other words, is the data available? When a node receives a new block, it must be able to retrieve the associated transaction data; otherwise, the chain can stall or be exploited. Celestia provides a modular DA layer so light nodes can verify availability efficiently without downloading whole blocks.

tl;dr

- Celestia is a modular data availability network: it orders blobs and keeps them available while execution and settlement live on layers above.

- It scales by decoupling execution from consensus and using data availability sampling (DAS) so light nodes can verify availability without downloading whole blocks; more light nodes sampling safely unlocks larger block sizes.

- Namespaced Merkle trees (NMTs) let each app fetch only its own namespaced data and prove availability.

- Block producers erasure-code blobs into a matrix, commit to every row and column, and put that root in the header.

- Light nodes sample within a rolling window; archival nodes (or providers) keep older data retrievable.

Celestia is a data availability (DA) layer that provides a scalable solution to the data availability problem . Due to the permissionless nature of the blockchain networks, a DA layer must provide a mechanism for the execution and settlement layers to check in a trust-minimized way whether transaction data is indeed available.

Two key features of Celestia’s DA layer are data availability sampling (DAS) and Namespaced Merkle trees (NMTs). Both features are novel blockchain scaling solutions: DAS enables light nodes to verify data availability without needing to download an entire block; NMTs enable execution and settlement layers on Celestia to download transactions that are only relevant to them.

Data availability sampling (DAS)

In general, light nodes download only block headers that contain commitments (i.e., Merkle roots) of the block data (i.e., the list of transactions).

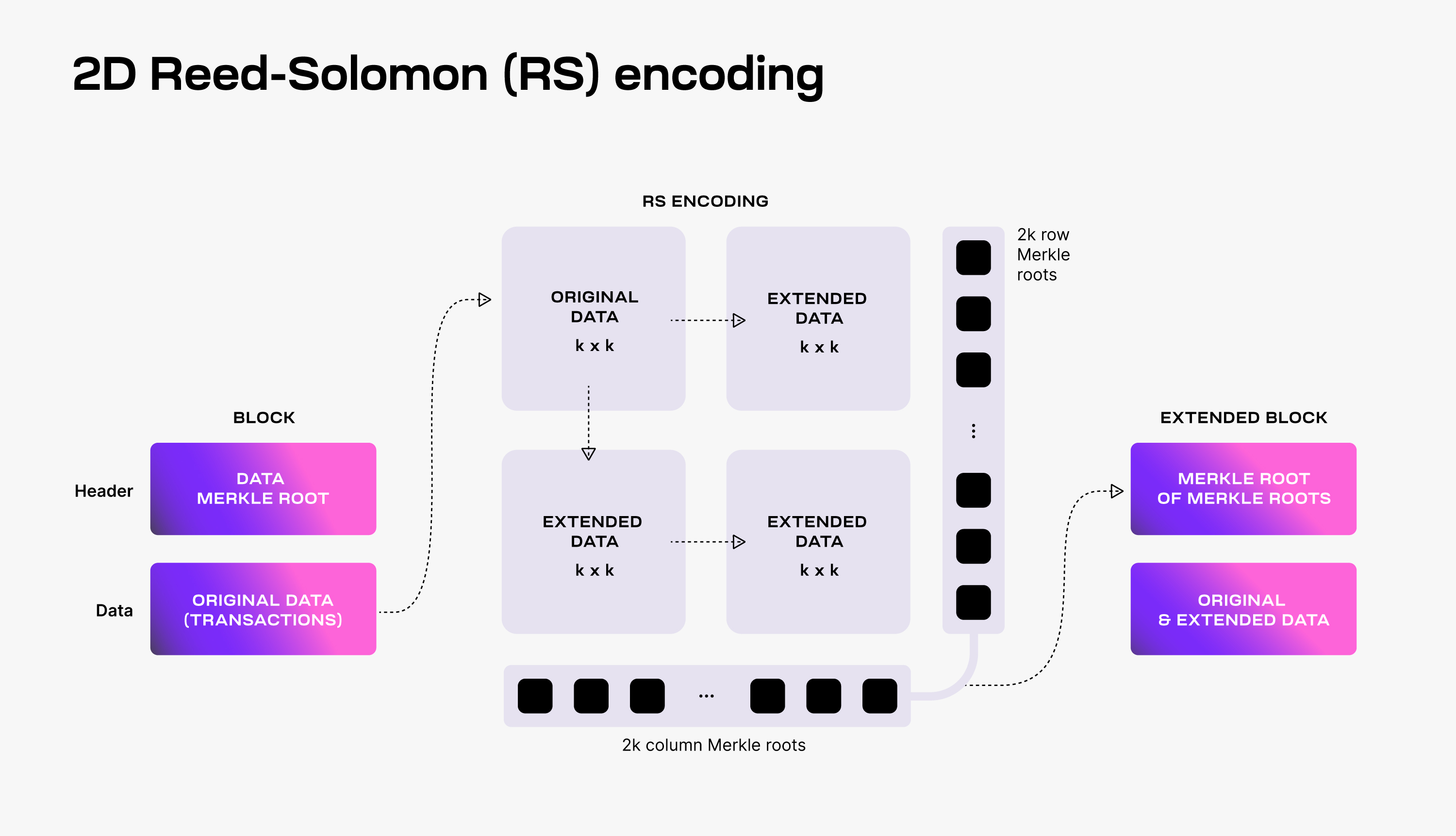

To make DAS possible, Celestia uses a 2-dimensional Reed-Solomon encoding scheme to encode the block data: every block data is split into shares, arranged in a matrix, and extended with parity data into a extended matrix by applying multiple times Reed-Solomon encoding.

Then, separate Merkle roots are computed for the rows and columns of the extended matrix; the Merkle root of these Merkle roots is used as the block data commitment in the block header.

To verify that the data is available, Celestia light nodes are sampling the data shares.

Every light node randomly chooses a set of unique coordinates in the extended matrix and queries bridge nodes for the data shares and the corresponding Merkle proofs at those coordinates. If light nodes receive a valid response for each sampling query, then there is a high probability guarantee that the whole block’s data is available.

Additionally, every received data share with a correct Merkle proof is gossiped to the network. As a result, as long as the Celestia light nodes are sampling together enough data shares (i.e., at least unique shares), the full block can be recovered by honest bridge nodes.

For more details on DAS, take a look at the original paper .

Scalability

DAS enables Celestia to scale the DA layer. DAS can be performed by resource-limited light nodes since each light node only samples a small portion of the block data. The more light nodes there are in the network, the more data they can collectively download and store.

This means that increasing the number of light nodes performing DAS allows for larger blocks (i.e., with more transactions), while still keeping DAS feasible for resource-limited light nodes. However, in order to validate block headers, Celestia light nodes need to download the intermediate Merkle roots.

For a block data size of bytes, this means that every light node must download bytes. Therefore, any improvement in the bandwidth capacity of Celestia light nodes has a quadratic effect on the throughput of Celestia’s DA layer.

Fraud proofs of incorrectly extended data

The requirement of downloading the intermediate Merkle roots is a consequence of using a 2-dimensional Reed-Solomon encoding scheme. Alternatively, DAS could be designed with a standard (i.e., 1-dimensional) Reed-Solomon encoding, where the original data is split into shares and extended with additional shares of parity data. Since the block data commitment is the Merkle root of the resulting data shares, light nodes no longer need to download bytes to validate block headers.

The downside of the standard Reed-Solomon encoding is dealing with malicious block producers that generate the extended data incorrectly.

This is possible as Celestia does not require a majority of the consensus (i.e., block producers) to be honest to guarantee data availability. Thus, if the extended data is invalid, the original data might not be recoverable, even if the light nodes are sampling sufficient unique shares (i.e., at least for a standard encoding and for a 2-dimensional encoding).

As a solution, Fraud Proofs of Incorrectly Generated Extended Data enable light nodes to reject blocks with invalid extended data. Such proofs require reconstructing the encoding and verifying the mismatch. With standard Reed-Solomon encoding, this entails downloading the original data, i.e., n² bytes. Contrastingly, with 2-dimensional Reed-Solomon encoding, only bytes are required as it is sufficient to verify only one row or one column of the extended matrix.

Namespaced Merkle trees (NMTs)

Celestia partitions the block data into multiple namespaces, one for every application (e.g., rollup) using the DA layer. As a result, every application needs to download only its own data and can ignore the data of other applications.

For this to work, the DA layer must be able to prove that the provided data is complete, i.e., all the data for a given namespace is returned. To this end, Celestia is using Namespaced Merkle trees (NMTs).

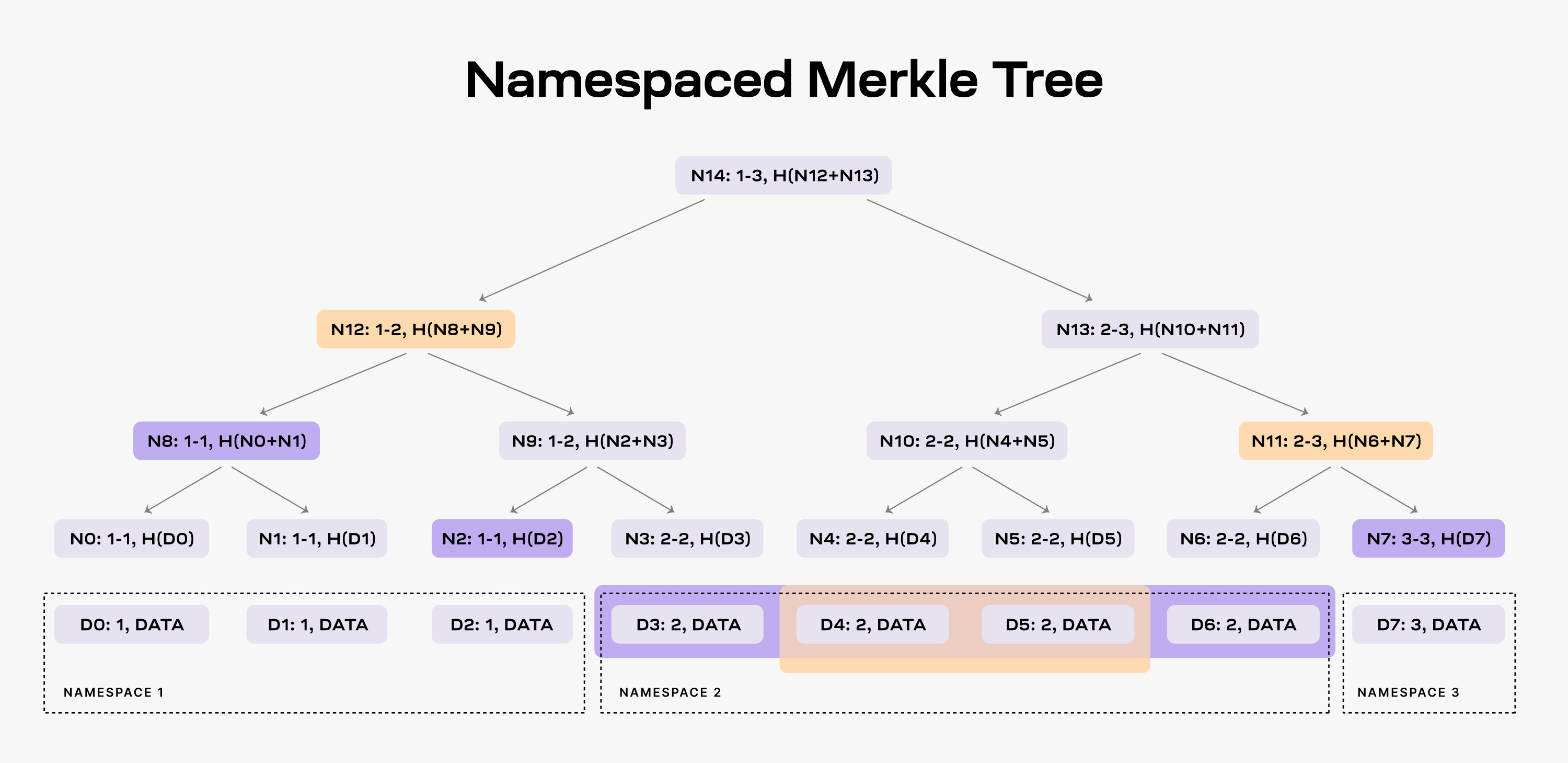

An NMT is a Merkle tree with the leaves ordered by the namespace identifiers and the hash function modified so that every node in the tree includes the range of namespaces of all its descendants. The following figure shows an example of an NMT with height three (i.e., eight data shares). The data is partitioned into three namespaces.

When an application requests the data for namespace 2, the DA layer must

provide the data shares D3, D4, D5, and D6 and the nodes N2, N8

and N7 as proof (note that the application already has the root N14 from

the block header).

As a result, the application is able to check that the provided data is part

of the block data. Furthermore, the application can verify that all the data

for namespace 2 was provided. If the DA layer provides for example only the

data shares D4 and D5, it must also provide nodes N12 and N11 as proofs.

However, the application can identify that the data is incomplete by checking

the namespace range of the two nodes, i.e., both N12 and N11 have descendants

part of namespace 2.

For more details on NMTs, refer to the original paper .

Building a PoS blockchain for DA

Providing data availability

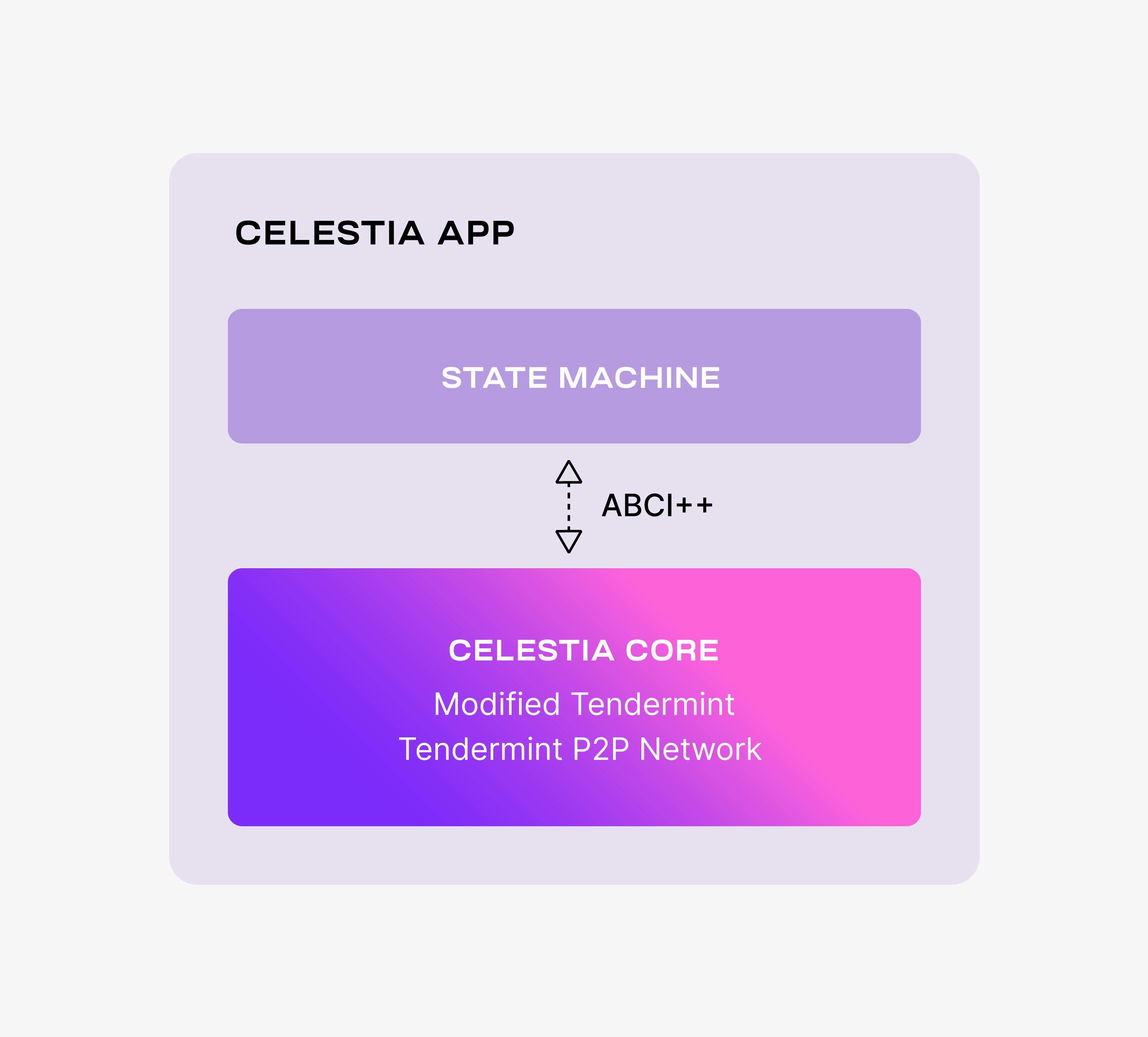

The Celestia DA layer consists of a PoS blockchain. Celestia is dubbing this blockchain as the celestia-app , an application that provides transactions to facilitate the DA layer and is built using Cosmos SDK . The following figure shows the main components of celestia-app.

celestia-app is built on top of celestia-core , a modified version of the Tendermint consensus algorithm . Among the more important changes to vanilla Tendermint, celestia-core:

- Enables the erasure coding of block data (using the 2-dimensional Reed-Solomon encoding scheme ).

- Replaces the regular Merkle tree used by Tendermint to store block data with a Namespaced Merkle tree that enables the above layers (i.e., execution and settlement) to only download the needed data (for more details, see the section below describing use cases).

For more details on the changes to Tendermint, take a look at the ADRs . Notice that celestia-core nodes are still using the Tendermint p2p network.

Similarly to Tendermint, celestia-core is connected to the application layer (i.e., the state machine) by ABCI++ , a major evolution of ABCI (Application Blockchain Interface).

The celestia-app state machine is necessary to execute the PoS logic and to enable the governance of the DA layer.

However, the celestia-app is data-agnostic — the state machine neither validates nor stores the data that is made available by the celestia-app.

Monolithic vs. modular blockchains

Blockchains instantiate replicated state machines : the nodes in a permissionless distributed network apply an ordered sequence of deterministic transactions to an initial state resulting in a common final state.

In other words, this means that nodes in a network all follow the same set of rules (i.e., an ordered sequence of transactions) to go from a starting point (i.e., an initial state) to an ending point (i.e., a common final state). This process ensures that all nodes in the network agree on the final state of the blockchain, even though they operate independently.

This means blockchains require the following four functions:

- Execution entails executing transactions that update the state correctly. Thus, execution must ensure that only valid transactions are executed, i.e., transactions that result in valid state machine transitions.

- Settlement entails an environment for execution layers to verify proofs, resolve fraud disputes, and bridge between other execution layers.

- Consensus entails agreeing on the order of the transactions.

- Data Availability (DA) entails making the transaction data available. Note that execution, settlement, and consensus require DA.

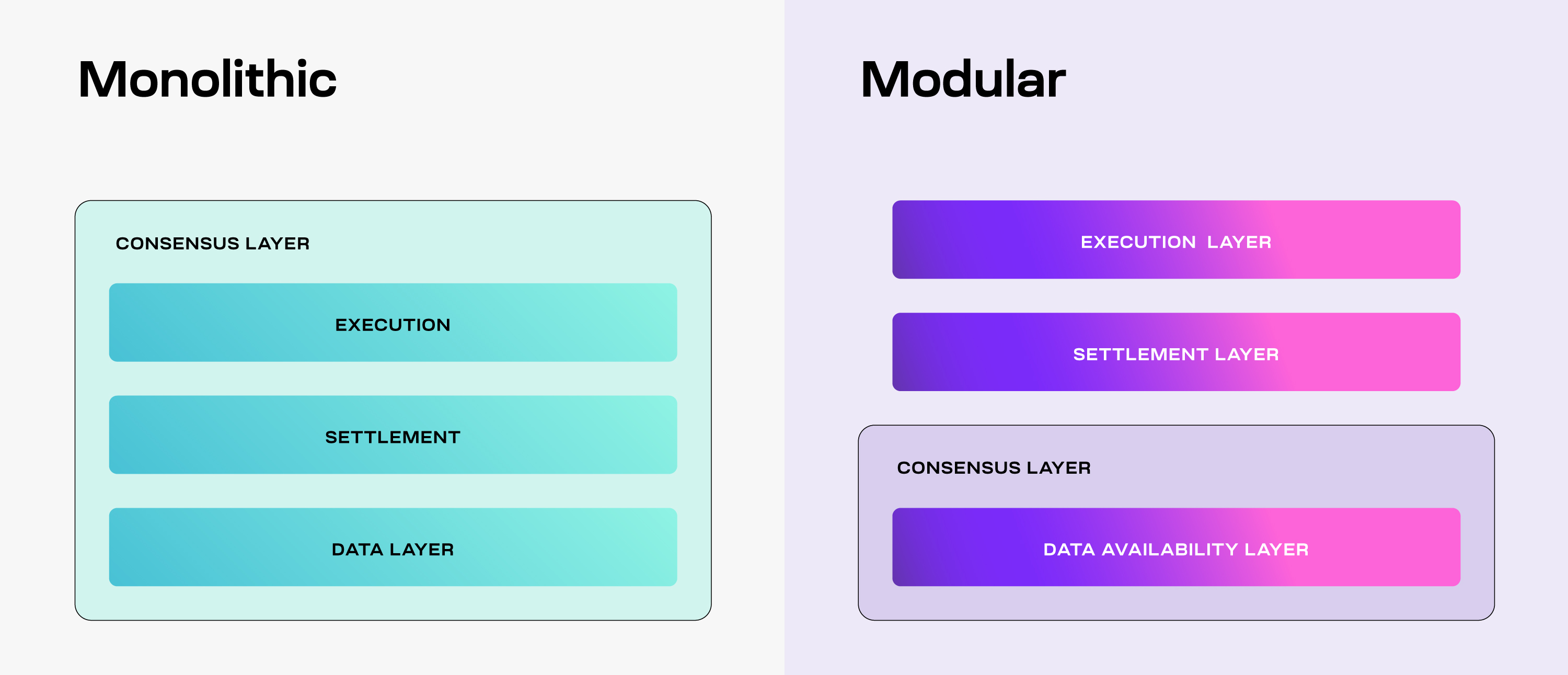

Traditional blockchains, i.e. monolithic blockchains, implement all four functions together in a single base consensus layer. The problem with monolithic blockchains is that the consensus layer must perform numerous different tasks, and it cannot be optimized for only one of these functions. As a result, the monolithic paradigm limits the throughput of the system.

As a solution, modular blockchains decouple these functions among multiple specialized layers as part of a modular stack. Due to the flexibility that specialization provides, there are many possibilities in which that stack can be arranged. For example, one such arrangement is the separation of the four functions into three specialized layers.

The base layer consists of DA and consensus and thus, is referred to as the Consensus and DA layer (or for brevity, the DA layer), while both settlement and execution are moved on top in their own layers. As a result, every layer can be specialized to optimally perform only its function, and thus, increase the throughput of the system. Furthermore, this modular paradigm enables multiple execution layers, i.e., rollups , to use the same settlement and DA layers.